Harvard’s Dr. CaBot Shatters Records: 60% Perfect Diagnoses, Physicians Can’t Even Tell It’s AI in Blind Test

In a watershed moment for artificial intelligence in medicine, researchers at Harvard Medical School have developed an AI diagnostician so convincing that physicians misidentified the source of its diagnostic reasoning in 74% of blinded comparisons with human experts. It also achieves higher diagnostic accuracy than a baseline of 20 board-certified physicians.

The system, called Dr. CaBot, just made history on October 8, 2025, becoming the first artificial intelligence ever to publish a diagnosis in the New England Journal of Medicine’s legendary Clinicopathological Conference series—a 125-year-old medical institution that has shaped how physicians learn diagnostic reasoning.

The implications are staggering: on complex text-based differential diagnosis, AI hasn’t just caught up to human diagnosticians; it has surpassed them.

The Landmark Study: A Century of Medical Cases

Published as a research paper on the arXiv preprint server on September 15, 2025, the Harvard team’s work, titled “Advancing Medical Artificial Intelligence Using a Century of Cases,” is one of the most rigorous evaluations of AI diagnostics to date. The researchers analyzed over 7,100 case studies spanning 102 years (1923-2025) alongside 1,021 radiological image challenges to create what they call CPC-Bench: a physician-validated diagnostic benchmark containing 10 different text-based and multimodal medical tasks.

The result is one of the largest and most comprehensive datasets applied to AI medical diagnosis to date.

The Stunning Results: AI Beats Physicians

When the system was tested on 377 contemporary cases, the results overturned previous assumptions about AI limitations in complex medical reasoning:

Diagnostic Accuracy:

- 60% top-1 accuracy: the correct diagnosis was the system’s first-ranked answer in 60% of cases

- 84% of cases had the correct diagnosis within the top 10 possibilities considered—extraordinarily high for complex multisystem disease

- Outperformed a baseline of 20 board-certified physicians
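
For readers unfamiliar with the metric, here is a minimal sketch of how top-1 and top-10 style accuracy can be computed from ranked differential lists. The function name `top_k_accuracy`, the toy cases, and the use of exact string matching are all assumptions made for illustration; the article does not describe how the study judged whether a diagnosis was correct, and real scoring of free-text diagnoses would need something more forgiving than string equality.

```python
from typing import Sequence


def top_k_accuracy(ranked: Sequence[Sequence[str]], truth: Sequence[str], k: int) -> float:
    """Fraction of cases whose true diagnosis appears among the first k ranked guesses."""
    if len(ranked) != len(truth):
        raise ValueError("ranked and truth must have the same number of cases")
    if not truth:
        raise ValueError("at least one case is required")
    if k < 1:
        raise ValueError("k must be at least 1")
    # Exact string match is a simplification (an assumption of this sketch).
    hits = sum(1 for guesses, answer in zip(ranked, truth) if answer in guesses[:k])
    return hits / len(truth)


# Toy, made-up data: each inner list is one case's ranked differential.
cases = [
    ["sarcoidosis", "tuberculosis", "lymphoma"],
    ["lymphoma", "sarcoidosis", "tuberculosis"],
    ["tuberculosis", "lymphoma", "sarcoidosis"],
    ["histoplasmosis", "tuberculosis", "sarcoidosis"],
]
answers = ["sarcoidosis", "sarcoidosis", "sarcoidosis", "lymphoma"]

top1 = top_k_accuracy(cases, answers, k=1)
top3 = top_k_accuracy(cases, answers, k=3)
print(f"top-1: {top1:.2f}, top-3: {top3:.2f}")
```

Top-k accuracy can only rise as k grows, which is why the reported top-10 figure (84%) is higher than the top-1 figure (60%).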

Next-Test Selection (Predicting What Test to Order Next):

- 98% accuracy in choosing which diagnostic test to order next

Blind Test Results—The Plot Twist:

In one of the most revealing findings, researchers had physicians read Dr. CaBot-generated differential diagnoses alongside actual human expert discussions—without telling them which was which. The results:

- In 74% of trials (46 of 62), physicians misidentified whether the text came from the AI or from a human expert

- Physicians rated Dr. CaBot more favorably than human experts across multiple quality dimensions including clarity, reasoning, and thoroughness

“In blinded comparisons of CaBot vs. human expert-generated text, physicians misclassified the source of the differential in 46 of 62 (74%) of trials, and scored CaBot more favorably across quality dimensions,” the paper states.
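
The quoted figure comes from a small sample, so it helps to see the arithmetic. The sketch below reproduces the 74% from the reported counts (46 of 62) and adds a 95% Wilson score interval. The interval is an illustrative addition of ours, not an analysis reported in the article, and `wilson_interval` is a hypothetical helper name.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives about 95%)."""
    if trials <= 0 or not 0 <= successes <= trials:
        raise ValueError("need trials > 0 and 0 <= successes <= trials")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half


# Counts taken from the quoted sentence: 46 misclassified out of 62 trials.
misclassified, trials = 46, 62
rate = misclassified / trials
low, high = wilson_interval(misclassified, trials)
print(f"{rate:.0%} misclassified; 95% interval roughly {low:.0%} to {high:.0%}")
```

With only 62 trials the interval is fairly wide, so the 74% is best read as an estimate and not an exact rate.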

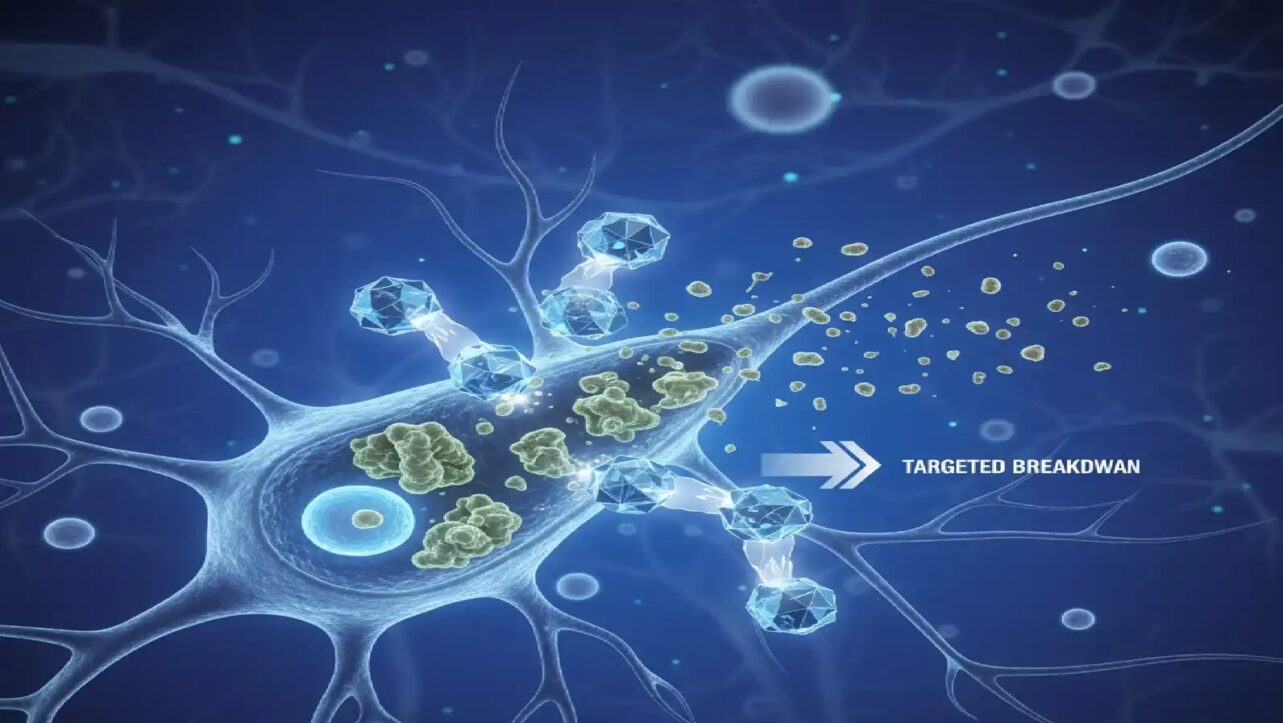

What Makes Dr. CaBot Different From Other Medical AI

Unlike previous AI diagnostic systems that function as “black boxes”—inputting patient data and mysteriously outputting an answer—Dr. CaBot explicitly shows its reasoning process, just like a human expert clinician. The system:

- Generates comprehensive differential diagnoses (listing all possible diagnoses considered)

- Explains each diagnostic possibility and why it was ruled in or out

- Narrates reasoning in natural speech through slide-based video presentations with realistic verbal patterns

- Produces written analyses that match the depth of expert physician reasoning

“We wanted to create an AI system that could generate a differential diagnosis and explain its detailed, nuanced reasoning at the level of an expert diagnostician,” said Dr. Arjun (Raj) Manrai, assistant professor of biomedical informatics at Harvard Medical School’s Blavatnik Institute, who led the research with doctoral student Thomas Buckley.

This transparency is revolutionary. A diagnosis without explanation is useless to clinicians—they need to understand the reasoning to trust the recommendation and learn from it.

The AI Models Behind Dr. CaBot

The research tested leading large language models (LLMs) including OpenAI’s o3 and Google’s Gemini 2.5 Pro. The results showed:

- o3 achieved the highest performance: 60% top-1 diagnostic accuracy and 84% top-10 accuracy

- Gemini 2.5 Pro was competitive, particularly on image analysis tasks

Interestingly, different AI models had different strengths—no single AI dominated all tasks.

Where AI Still Struggles

Despite the stunning diagnostic accuracy, the research revealed important limitations that must be addressed before clinical deployment:

Image Interpretation (Medical Imaging):

- o3 and Gemini 2.5 Pro achieved only 67% accuracy on radiological image challenges

- This is concerning because imaging plays a critical role in many diagnoses

Literature Search and Retrieval:

- The paper also flags literature retrieval as a weaker area, alongside image interpretation

The paper concludes: “LLMs exceed physician performance on complex text-based differential diagnosis and convincingly emulate expert medical presentations, but image interpretation and literature retrieval remain weaker.”

A Historic Moment: NEJM’s First AI Diagnosis

The October 8, 2025 NEJM publication of Dr. CaBot’s case discussion marks a seismic shift in medicine’s relationship with artificial intelligence. It offers a window into Dr. CaBot’s capabilities, showcases its usefulness for medical educators and students, and hints at its potential for physicians in the clinic. The Case Records of the Massachusetts General Hospital, known as clinicopathological conferences (CPCs), represent one of medical education’s most revered traditions:

- Dating back to the 1800s when Mass General physicians began using case studies for teaching

- Formalized in 1900 by pathologist Richard Cabot—after whom Dr. CaBot is named

- Published continuously since 1923 by NEJM to teach diagnostic reasoning

- Legendarily difficult: Known for being “extremely challenging, filled with distractions and red herrings”

The fact that NEJM published Dr. CaBot’s diagnosis in this legendary series—for the first time ever with an AI—signals that artificial intelligence has definitively entered the highest circles of medical reasoning.

The Patient Case: A Complex Medical Puzzle

The specific patient who inspired the NEJM publication was a 36-year-old male admitted to Massachusetts General Hospital presenting with a tricky combination of symptoms: abdominal pain, fever, and hypoxemia (dangerously low blood oxygen)—precisely the type of multisystem presentation that can confound even experienced diagnosticians.

Dr. CaBot not only reached the correct diagnosis but presented its reasoning through both written analysis and a narrated 5-minute slide presentation, matching the format human experts use when presenting at CPC conferences.

Not Ready for Your Hospital Yet

Importantly, Dr. CaBot is not yet deployed clinically. The system is designed for medical education and research, not for diagnosing real patients in hospitals. The Harvard team is currently:

- Demonstrating the system at Boston-area hospitals to gather expert feedback

- Refining capabilities in weaker areas like image interpretation

- Planning broader research applications

The Dataset They’re Releasing: CPC-Bench

In a move that could accelerate AI development across the entire medical field, the researchers are publicly releasing both Dr. CaBot and CPC-Bench—the massive physician-validated benchmark dataset spanning 102 years of medical cases and image challenges.

This is enormous. Medical AI researchers worldwide can now benchmark their systems against the same rigorous standards used in this Harvard study, enabling transparent comparison of progress.

What This Means for Medicine

For Medical Education:

- Dr. CaBot could revolutionize how doctors are trained, providing unlimited patient cases with expert-level reasoning explanations

- Students could learn from an AI that never gets tired, never judges mistakes, and always explains its reasoning

For Diagnostic Accuracy:

- Physicians using AI assistance could catch diagnoses they might otherwise miss, particularly in rare disease presentations

- The 98% accuracy in selecting the next diagnostic test means AI could optimize testing pathways and reduce healthcare costs

For Research:

- CPC-Bench and Dr. CaBot provide the research community with standardized benchmarks for tracking AI progress

- Researchers now have a century of validated medical cases to train and test new AI models

For Future Clinical Deployment:

- The roadmap is clear: Improve image interpretation and literature retrieval, then pilot clinical testing

- Within 3-5 years, AI diagnostic assistants like Dr. CaBot could be in hospital systems

The Team Behind the Breakthrough

The research was led by Arjun K. Manrai (Harvard Medical School) and Thomas A. Buckley (a doctoral student at the Harvard Kenneth C. Griffin Graduate School of Arts and Sciences), along with a 25-person authorship team including radiologists, pathologists, internists, and AI specialists from Harvard and external institutions. The paper lists additional NEJM CPC authors including Michael Hood, Akwi Asombang, and Elizabeth Hohmann.

The Bottom Line

Artificial intelligence has officially crossed the threshold from “interesting tool” to “genuinely outperforms human experts” in complex diagnostic reasoning. The question is no longer whether AI can diagnose diseases—it’s how quickly we can deploy it safely and ethically in clinical settings.

The publication in NEJM, backed by rigorous testing on over 7,000 historical cases and 377 contemporary cases, makes this unmistakable: AI diagnosticians are here, they outperformed a physician baseline on text-based differential diagnosis, and they’re about to transform medical education and practice.